Imagine a collection of N matrices numbered from 1 to N, all of the same size. Each entry of every matrix contains two elements: a payoff that Bill pays to Anne, and the number of the payoff matrix that Anne and Bill will play on the following day. The game is played as follows. Every day Anne and Bill face one of the matrices; Anne chooses a row and Bill chooses a column, and in this way an entry of the matrix is selected. This entry determines the amount that Bill pays to Anne and the matrix that the two will face tomorrow; the amount is deducted from Bill’s bank account and added to Anne’s bank account.
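To make the rules concrete, here is a minimal Python sketch of such a game. The representation (a dictionary of matrices whose entries are `(payoff, next_matrix)` pairs), the function name `play`, and the numbers in `example` are all my own assumptions for illustration; they are not taken from any particular game discussed in this post.

```python
from typing import Dict, List, Tuple

# Each entry holds (amount Bill pays Anne, number of tomorrow's matrix).
Entry = Tuple[float, int]
Game = Dict[int, List[List[Entry]]]


def play(game: Game, start: int, rows: List[int], cols: List[int]) -> float:
    """Play one day per (row, col) pair and return Anne's average payoff."""
    state = start
    total = 0.0
    for row, col in zip(rows, cols):
        payoff, state = game[state][row][col]  # entry fixes payoff and next matrix
        total += payoff
    return total / len(rows)


# Hypothetical two-matrix game; the numbers are made up.
example: Game = {
    1: [[(1.0, 1), (0.0, 2)],
        [(0.0, 2), (1.0, 1)]],
    2: [[(-1.0, 1), (0.0, 2)],
        [(0.0, 2), (-1.0, 1)]],
}

avg = play(example, start=1, rows=[0, 0, 1], cols=[0, 1, 1])
```

In this run the play visits matrix 1 twice and then matrix 2, and Anne collects 1, 0 and -1 on the three days.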

The game that I just described is a stochastic game. Suppose that Anne would like to maximize the limit of her average payoff, and Bill wants to minimize this quantity.

If Anne and Bill observe the matrix that they face, then we are in the model of stochastic games studied by Mertens and Neyman, who proved that the value exists and is equal to the limit of the discounted values, and who actually provided explicit epsilon-optimal strategies (which, unfortunately, cannot be computed efficiently).
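For reference, the two payoff criteria can be written out as follows. The notation is an assumption of mine and does not appear above: $r_t$ is the amount Bill pays Anne on day $t$, $\lambda \in (0,1]$ is the discount rate, $v_\lambda$ is the value of the $\lambda$-discounted game, and $v$ is the value of the undiscounted game.

$$
\gamma_\lambda = \lambda \sum_{t=1}^{\infty} (1-\lambda)^{t-1} r_t ,
\qquad
\bar{\gamma}_n = \frac{1}{n} \sum_{t=1}^{n} r_t ,
\qquad
v = \lim_{\lambda \to 0} v_\lambda .
$$

The normalization by $\lambda$ is one common convention. The averages $\bar{\gamma}_n$ need not converge along every play, so the "limit of the average payoff" is usually read as a liminf or a limsup.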

Now suppose that Anne and Bill play in the dark: they do not observe the matrix that they face. They also do not observe each other’s choices or the amounts in their bank accounts. Nothing. Complete darkness. All that each of them knows is the structure of the game and his/her own past choices.

When payoffs are discounted the value exists. Indeed, the discounted payoff is a continuous function over the space of mixed strategies, and it is bilinear. A standard fixed point argument would deliver the existence of the value. But here the payoffs are undiscounted. Does the value still exist?

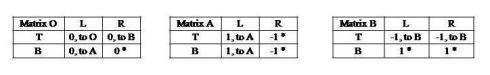

Not surprisingly, the answer in general is negative. The value need not exist. To see this, we will present the game “choose the largest integer” as a stochastic game played in the dark. So, suppose that there are three matrices, O, A and B, each with two rows and two columns (in fact, there will be three additional matrices that will be absorbing: once they are reached, the play never leaves them). The matrix O is the initial matrix, the matrix A corresponds to “Anne chose a number smaller than Bill” (Bill may have chosen infinity), and the matrix B corresponds to “Bill chose a number smaller than Anne” (Anne may have chosen infinity).

In the matrix O the payoff is 0 whatever Anne and Bill choose. An entry with an asterisk, for example, the entry (B,R) in matrix O, means that if this entry is chosen, the payoff is 0, and the payoff in all subsequent stages is 0 as well: the game moves to an absorbing matrix with payoff 0.

Let us verify that this game corresponds to the game “choose the largest integer”. As long as the players choose (T,L) the play remains in matrix O. If Anne chooses B before Bill chooses R, the play moves to matrix A, where Anne’s choices do not affect the payoff or the transitions. Hence a strategy of Anne reduces to the determination of the first time she chooses B. Similarly, a strategy of Bill reduces to the determination of the first time he chooses R. If Anne chooses B before Bill chooses R, the long-run average payoff is -1: Bill wins. If Anne chooses B after Bill chooses R, the long-run average payoff is 1: Anne wins. If they choose B and R at the same time, the long-run average payoff is 0: the outcome is a draw. If Anne chooses B in a finite time and Bill never chooses R, the long-run average payoff is -1: Bill wins. If Bill chooses R in a finite time and Anne never chooses B, the long-run average payoff is 1: Anne wins. Thus, this game is indeed the game of “choosing the largest integer”, which does not have a value.
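The reduction to stopping times can be sketched in a few lines of Python. This follows the description of matrices A and B (whoever deviates first has chosen the smaller number and loses); the function names and the use of `math.inf` for "never" are my own conventions, and the sample distribution for Anne is hypothetical.

```python
import math
from typing import Dict


def long_run_payoff(anne_time: float, bill_time: float) -> int:
    """Anne's long-run average payoff, given the first day Anne plays B
    and the first day Bill plays R (math.inf means 'never')."""
    if anne_time == bill_time:
        # Simultaneous deviation hits the absorbing 0 entry; if neither
        # ever deviates, the play stays in O, where the payoff is also 0.
        return 0
    # The player who waits longer has chosen the larger integer.
    return 1 if anne_time > bill_time else -1


def expected_payoff(anne_dist: Dict[float, float], bill_time: float) -> float:
    """Expected payoff when Anne randomizes over deviation times."""
    return sum(p * long_run_payoff(t, bill_time) for t, p in anne_dist.items())


# Hypothetical mixed strategy: Anne deviates on day 1, 2, 3 or 4 with equal odds.
anne = {1: 0.25, 2: 0.25, 3: 0.25, 4: 0.25}
reply = expected_payoff(anne, bill_time=5)  # Bill simply waits one day longer
```

Against any distribution of Anne that puts almost all of its weight on finite days, Bill can wait long enough to push the expected payoff close to -1, and by symmetry Anne can do the same to Bill; this is why the game has no value.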

But sometimes the value does exist. Two examples of classes of games where the value exists were given here. Roughly, suppose that the action sets of Anne and Bill coincide: the matrices are square matrices. Suppose also that the transitions are as follows: states are divided into two groups, matching states and non-matching states, and the initial state is a matching state. In matching states, as long as Anne and Bill choose the same action, the play remains in the set of matching states; once Anne and Bill choose different actions, the play moves to a non-matching state. From non-matching states, the play moves to the initial state (which is a matching state). In such games, a player knows that either the other player matched him, in which case he knows the other player’s action and therefore the identity of the current state, or the other player did not match him, in which case the play will return to the initial state in the following stage.

This is interesting, but there are very simple games that do not fall into the description from the previous paragraph, and therefore we do not know whether their value exists. For example, suppose that there are two matrices, and transitions between the two are general. Does the value exist? Does anyone have a clue?
